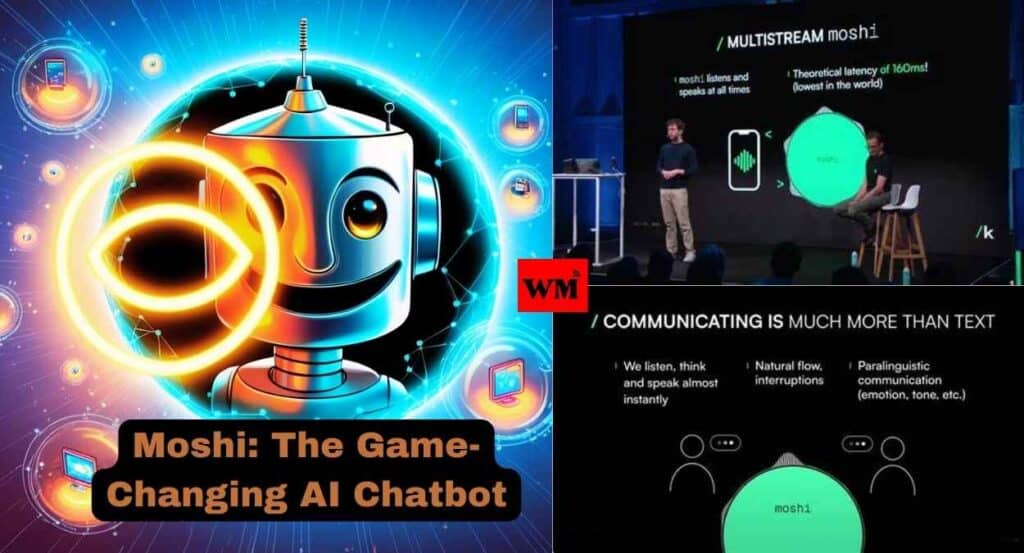

Moshi: The AI That Feels Your Vibe and Talks Back

In the fast-paced world of artificial intelligence, a new star is rising. Moshi, an innovative voice AI chatbot developed by the French AI research lab Kyutai, is making waves with its groundbreaking features. This isn’t just another run-of-the-mill virtual assistant: Moshi is bringing a whole new level of interaction to the table, potentially outshining even the mighty ChatGPT.

Imagine having a conversation with a computer that not only understands your words but picks up on the subtle nuances in your voice. Picture an AI that responds in the blink of an eye, speaks with a variety of accents, and even works when you’re offline. Sounds like something out of a sci-fi movie, right? Well, Moshi is turning this futuristic vision into reality.

In this article, we’ll dive deep into what makes Moshi tick, how it stacks up against its competitors, and why it might just be the next big thing in AI. So buckle up, folks – we’re about to take a journey into the cutting-edge world of voice-enabled AI assistants.

What Sets Moshi Apart from the Crowd?

When it comes to AI chatbots, Moshi isn’t just playing catch-up – it’s setting the pace. Here’s what makes this French AI creation stand out:

- Emotional Intelligence: Moshi doesn’t just hear your words; it listens to your tone. This AI can pick up on the emotions in your voice, adding a whole new dimension to your interactions.

- Speed Demon: With responses coming in at a lightning-fast 200 milliseconds, Moshi keeps conversations flowing as naturally as chatting with a friend.

- Accent Aficionado: Whether you prefer a British accent or an Australian twang, Moshi’s got you covered with its ability to speak in various accents.

- Emotional Range: Moshi isn’t just a monotone robot. It can express itself in 70 different emotional styles, making conversations more engaging and lifelike.

- Works Without Wi-Fi: Unlike many AI assistants that go quiet when you lose internet, Moshi can keep chatting even when you’re offline.

- Multitasking Marvel: Moshi can handle two audio streams at once, meaning it can listen and respond simultaneously – just like in a real conversation.

The Brains Behind Moshi

Kyutai, the lab that birthed Moshi, took a different approach to AI development. Instead of relying on massive amounts of data and computing power, they focused on efficiency and innovation. Here’s the scoop on how Moshi came to be:

- Small but Mighty: Moshi is built on the Helium 7B model, which is relatively compact compared to some AI giants.

- Speedy Development: A small team of just eight researchers brought Moshi to life in only six months. Talk about productivity!

- Synthetic Learning: Moshi honed its skills on over 100,000 synthetic dialogues created using Text-to-Speech technology.

- Professional Polish: To give Moshi a more natural sound, Kyutai teamed up with a professional voice artist.

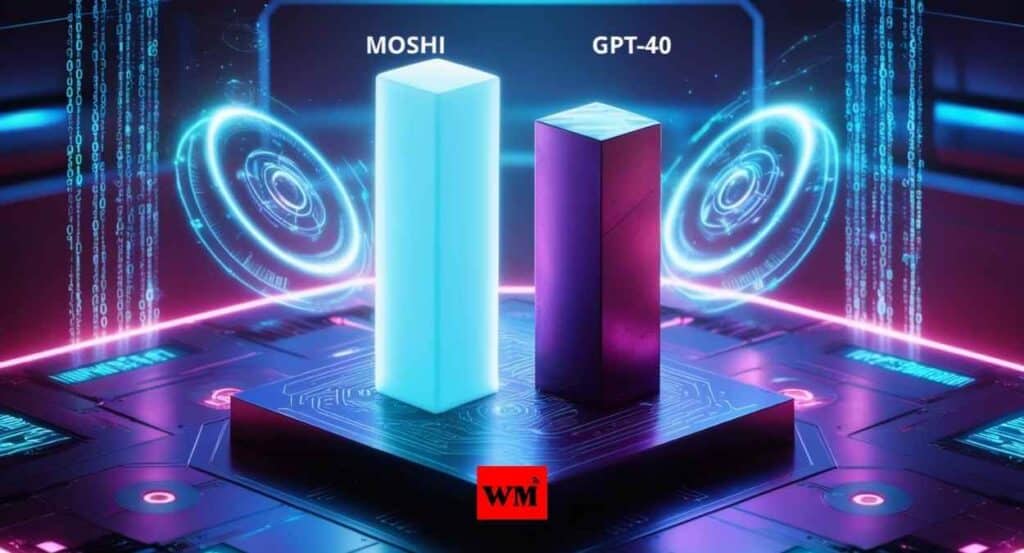

Moshi vs. GPT-4o: David and Goliath?

While OpenAI’s GPT-4o has been making headlines with its advanced capabilities, Moshi is stepping up as a worthy challenger. Let’s see how these AI powerhouses compare:

| Feature | Moshi | GPT-4o |
|---|---|---|
| Response Time | 200 milliseconds | 232-320 milliseconds |
| Offline Use | Yes | No |
| Emotional Recognition | Yes | Limited |
| Open Source | Planned | No |
| Accent Variety | Multiple | Limited |

As you can see, Moshi holds its own against the bigger player, even outperforming in some areas.

Under the Hood: Moshi’s Tech Specs

Moshi isn’t just impressive on the surface – its underlying technology is equally fascinating:

- Helium 7B Model: This forms the backbone of Moshi’s language processing abilities.

- Multimodal Magic: Moshi seamlessly blends text and audio training for a more comprehensive understanding.

- Hardware Friendly: With support for CUDA, Metal, and CPU backends, plus 4-bit and 8-bit quantization, Moshi can run smoothly on various devices.

- Real-Time Prowess: An end-to-end latency of just 200 milliseconds allows for natural, flowing conversations.
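Wondering why 4-bit and 8-bit quantization matter for running Moshi on everyday hardware? Here’s a rough back-of-the-envelope sketch in Python. It assumes "7B" means roughly 7 billion weights and counts only the memory needed to store those weights. The helper name `weight_memory_gb` is made up for illustration and is not part of any Moshi or Kyutai tooling, and a real deployment also needs memory for the audio codec, activations, and caches.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


# Assumption: "7B" is taken as roughly 7 billion parameters.
PARAMS_7B = 7e9

# Compare unquantized 16-bit weights with the 8-bit and 4-bit options.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(PARAMS_7B, bits):.1f} GB")
```

Under these assumptions, the weights shrink from about 14 GB at 16 bits to about 7 GB at 8 bits and about 3.5 GB at 4 bits, which helps explain how a model of this size could fit on a laptop.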

Moshi in Action: What Can This AI Whiz Do?

Curious about what Moshi can actually do in the real world? Here are some examples of its capabilities:

- Improv Master: Ask Moshi to play different characters or spin a yarn, and watch its creativity shine.

- Jack of All Trades: From walking you through a recipe to answering trivia questions, Moshi’s got you covered.

- Digital Shoulder to Cry On: With its ability to understand and respond to emotions, Moshi could potentially serve as a comforting digital companion.

- Language Learning Buddy: Practice conversations in different accents to polish your language skills.

The Future Looks Bright for Moshi

Kyutai has big plans for Moshi that could shake up the AI landscape:

- Open Source Revolution: By making Moshi’s code and framework available to all, Kyutai aims to spark innovation and transparency in AI development.

- Sound Check: Future updates will include AI audio identification, watermarking, and signature tracking systems.

- Industry Influencer: Moshi’s capabilities could inspire new features in other voice-enabled AI assistants like Alexa or Google Assistant.

Want to Chat with Moshi? Here’s How!

Ready to experience Moshi for yourself? Follow these simple steps:

- Head over to us.moshi.chat

- Follow the on-screen prompts to start your chat

- You’ll have up to 5 minutes to explore Moshi’s capabilities

- Try out different tones, accents, and questions to see what Moshi can do

Remember, Moshi is still in its experimental phase, so approach your chat with an open mind and a sense of adventure!

Food for Thought: What Moshi Means for the Future of AI

Moshi’s arrival on the scene raises some intriguing questions about where AI is headed:

- AI for Everyone: Could Moshi’s offline capabilities and efficient design make advanced AI more accessible to a wider audience?

- Privacy in the Age of AI: How will Moshi’s ability to understand emotions and tone impact user privacy?

- David vs. Goliath: Will Moshi’s open-source approach challenge the dominance of closed AI systems like ChatGPT?

- Ethical AI: As AI becomes more human-like in its interactions, what new ethical guidelines might we need to consider?

FAQs: Everything You Wanted to Know About Moshi

Q. How does Moshi’s ability to understand emotions work?

A. Moshi uses advanced audio processing to analyze the tone, pitch, and rhythm of your voice, allowing it to interpret emotional cues. However, it’s important to remember that while Moshi can recognize and respond to emotions, it doesn’t actually feel them like humans do.

Q. Can Moshi speak languages other than English?

A. Kyutai hasn’t specified language support beyond English so far. Moshi’s ability to handle various accents hints that multilingual support could be on the horizon, but nothing has been confirmed.

Q. How does Moshi’s development process differ from that of ChatGPT?

A. Moshi was developed by a smaller team in a shorter timeframe, focusing on specific features like emotional intelligence and offline capabilities. In contrast, ChatGPT’s development involved a larger team and a broader approach to language understanding.

Q. What kind of computer do I need to run Moshi?

A. One of Moshi’s selling points is its ability to run on consumer-grade hardware, including MacBooks. This makes it more accessible than some other AI models that require more powerful systems.

Q. How is Kyutai addressing potential safety and ethical concerns with Moshi?

A. Kyutai is developing features like audio watermarking and plans to make Moshi open-source. This could help address safety and ethical concerns by allowing for community oversight and transparency in development.

Q. What are some potential real-world applications for Moshi beyond casual conversation?

A. Moshi could potentially be used in customer service to provide more empathetic interactions, in language learning programs to help with accent practice, or in mental health support systems as a first line of emotional support.

Q. How does Moshi’s offline capability work, and what are its limitations?

A. Moshi’s ability to function offline is due to its efficient design and ability to run locally on a device. However, this may limit some functionalities that require real-time data or updates. The full extent of its offline capabilities is still being explored.

Conclusion

Moshi is more than just another AI chatbot – it’s a glimpse into the future of human-AI interaction. With its blend of emotional intelligence, lightning-fast responses, and versatile communication skills, this French AI creation is pushing the boundaries of what we thought possible in artificial intelligence.

As Moshi continues to evolve, it has the potential to revolutionize everything from customer service to language learning. Whether it becomes a household name or simply inspires the next generation of AI assistants, one thing’s for sure – Moshi is helping to usher in a new era where our digital interactions are more natural, nuanced, and emotionally aware than ever before.

The future of AI is here, and it speaks with an accent, understands your feelings, and doesn’t need Wi-Fi. Welcome to the world of Moshi – where artificial intelligence gets personal.
